Introduction to LangChain

Large Language Models (LLMs) like GPT have completely changed how we build applications. But let’s be honest—calling an LLM API directly only gets you so far. What if you want memory, tools, multiple steps, or decision-making logic? That’s exactly where LangChain enters the picture.

Think of LangChain as the glue that turns raw AI models into real, production-ready applications.

What Problem Does LangChain Solve?

LLMs are powerful but stateless. They don’t remember past conversations, can’t easily call APIs, and struggle with complex workflows. LangChain solves this by providing a structured framework to connect prompts, models, tools, memory, and logic into a single pipeline.

Evolution of LLM-Based Applications

Early AI apps were simple Q&A bots. Today, we build AI agents, autonomous workflows, and enterprise-grade solutions. LangChain accelerates this evolution—especially when paired with Python.

What Is LangChain?

Definition of LangChain

LangChain is an open-source framework designed to build applications powered by large language models. It allows developers to chain together prompts, models, external tools, memory, and logic into a cohesive system.

Official site:

👉 https://www.langchain.com

Documentation:

👉 https://python.langchain.com

Core Philosophy Behind LangChain

LangChain is built around one idea: LLMs are more powerful when they work with other systems—databases, APIs, files, and even other LLM calls.

Real-World Use Cases of LangChain

- AI chatbots with memory

- Document summarization systems

- AI-powered search engines

- Automated coding assistants

- SEO and content automation pipelines

Key Components of LangChain

Prompts and Prompt Templates

Prompts define how you talk to the LLM. Templates allow you to reuse and dynamically inject variables.
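
To make the idea of a reusable template concrete, here is a minimal plain-Python sketch. The class name `SimplePromptTemplate` is hypothetical and is not LangChain's own implementation; it only illustrates how named placeholders are filled in at call time.

```python
from string import Formatter


class SimplePromptTemplate:
    """Toy stand-in for a prompt template: a string with named placeholders."""

    def __init__(self, template: str) -> None:
        self.template = template
        # Collect the placeholder names found in the template text
        self.variables = {
            name for _, name, _, _ in Formatter().parse(template) if name
        }

    def format(self, **values: str) -> str:
        # Fail early if the caller forgot a variable
        missing = self.variables - set(values)
        if missing:
            raise KeyError(f"Missing template variables: {sorted(missing)}")
        return self.template.format(**values)


template = SimplePromptTemplate("Explain {topic} to a {audience} in two sentences.")
prompt = template.format(topic="LangChain", audience="beginner")
print(prompt)
```

The same template object can be reused with different values, which is the main reason to prefer templates over hand-built strings.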

Chains

Chains connect multiple steps together. Output from one step becomes input to another—like a conveyor belt for intelligence.
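
The conveyor-belt idea can be sketched as ordinary function composition. This is an illustration only: `make_chain`, `build_prompt`, `fake_llm`, and `tidy` are hypothetical names, and `fake_llm` stands in for a real model call.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def make_chain(*steps: Step) -> Step:
    """Compose steps so each one receives the previous step's output."""

    def run(value: str) -> str:
        return reduce(lambda acc, step: step(acc), steps, value)

    return run


def build_prompt(topic: str) -> str:
    return f"Explain {topic} in simple terms"


def fake_llm(prompt: str) -> str:
    # Placeholder for a real model call
    return f"[model answer to: {prompt}]"


def tidy(text: str) -> str:
    return text.strip().upper()


chain = make_chain(build_prompt, fake_llm, tidy)
result = chain("LangChain")
print(result)
```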

Agents

Agents decide what to do next. They can choose tools, make decisions, and adapt dynamically.
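
A rough sketch of the tool-selection step, in plain Python, might look like the following. It assumes a keyword rule in `choose_tool` where a real agent would ask the LLM to pick the tool; all function and tool names here are hypothetical.

```python
from typing import Callable, Dict


def add_numbers(text: str) -> str:
    """Tool: sum every integer found in the text."""
    numbers = [int(token) for token in text.split() if token.lstrip("-").isdigit()]
    return str(sum(numbers))


def count_words(text: str) -> str:
    """Tool: count whitespace-separated words."""
    return str(len(text.split()))


TOOLS: Dict[str, Callable[[str], str]] = {
    "add": add_numbers,
    "count": count_words,
}


def choose_tool(query: str) -> str:
    """Stand-in for the model's decision about which tool fits the query."""
    lowered = query.lower()
    return "add" if "add" in lowered or "sum" in lowered else "count"


def run_agent(query: str) -> str:
    tool_name = choose_tool(query)      # decide
    observation = TOOLS[tool_name](query)  # act
    return f"{tool_name} -> {observation}"


first = run_agent("Please add 2 and 40")
second = run_agent("How long is this sentence")
print(first)
print(second)
```

The point of the sketch is the decide-then-act loop: the tool registry stays fixed while the choice changes per query.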

Memory

Memory allows applications to remember previous interactions—crucial for chatbots and assistants.
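
Conceptually, the simplest form of memory replays earlier turns in front of each new prompt. The sketch below assumes a hypothetical `BufferMemory` class written in plain Python; it is not LangChain's implementation, only an illustration of the mechanism.

```python
from typing import List, Tuple


class BufferMemory:
    """Keeps every exchange and replays it as context for the next prompt."""

    def __init__(self) -> None:
        self.turns: List[Tuple[str, str]] = []

    def save(self, user_text: str, ai_text: str) -> None:
        self.turns.append((user_text, ai_text))

    def build_prompt(self, user_text: str) -> str:
        # Earlier turns come first, then the new question
        history = "".join(f"Human: {u}\nAI: {a}\n" for u, a in self.turns)
        return f"{history}Human: {user_text}\nAI:"


memory = BufferMemory()
memory.save("Hi, my name is Pradeep", "Nice to meet you, Pradeep!")
prompt = memory.build_prompt("What is my name?")
print(prompt)
```

Because the stateless model sees the whole transcript on every call, it can answer the follow-up question even though it stores nothing itself.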

Tools and Integrations

LangChain integrates with:

- OpenAI

- Hugging Face

- Pinecone

- FAISS

- Google Search

- Custom APIs

Why Python Is the Preferred Language for LangChain

Python’s Dominance in AI and ML

Python is the dominant language in AI and machine learning. Major frameworks such as TensorFlow and PyTorch are used primarily through Python, and LangChain follows the same pattern.

Rich Ecosystem and Libraries

Python offers:

- NumPy

- Pandas

- FastAPI

- Flask

- SQLAlchemy

Because LangChain is a regular Python library, it can be used alongside all of these.

Simplicity and Developer Productivity

Python reads like English. That means:

- Faster development

- Fewer bugs

- Easier onboarding

Community and Open-Source Support

The Python LangChain community is massive and fast-moving. Most examples, tutorials, and updates land in Python first.

LangChain with Python – Architecture Overview

How LangChain Works Internally

At a high level:

- User input enters the system

- Prompt template formats input

- LLM processes the prompt

- Output flows through chains or agents

- Memory stores context

Request Flow in a Python-Based LangChain App

Input → Prompt → Chain → Tool/API → LLM → Response

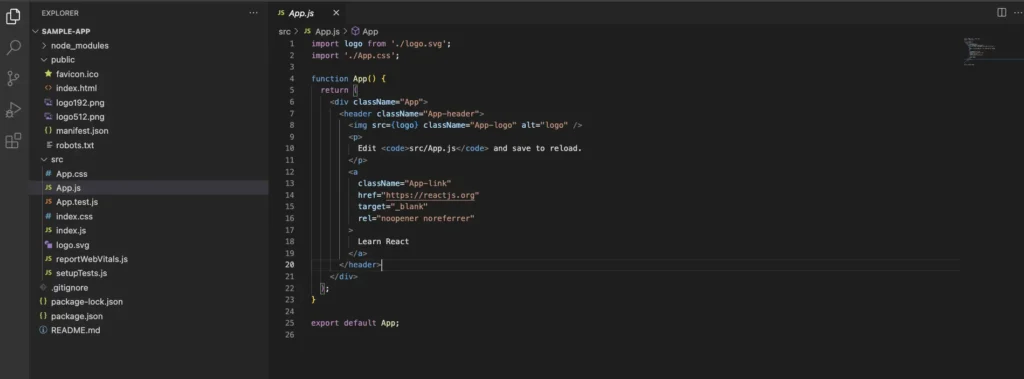
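
The request flow can be traced with a small plain-Python sketch. Every function here is a hypothetical placeholder (the tool returns a fixed string and the model simply echoes its context), so treat it as a picture of the stage order rather than working LangChain code.

```python
from typing import Dict, List

trace: List[str] = []  # records the order in which stages run


def format_prompt(user_input: str) -> str:
    trace.append("prompt")
    return f"Answer the question: {user_input}"


def call_tool(prompt: str) -> Dict[str, str]:
    trace.append("tool")
    # Fixed lookup result standing in for an external API call
    return {"prompt": prompt, "context": "LangChain is an open-source framework."}


def call_llm(payload: Dict[str, str]) -> str:
    trace.append("llm")
    # Placeholder model: answers from the context it was given
    return f"Based on the context: {payload['context']}"


def handle_request(user_input: str) -> str:
    """Plays the role of the chain: it wires the stages together."""
    trace.append("input")
    answer = call_llm(call_tool(format_prompt(user_input)))
    trace.append("response")
    return answer


response = handle_request("What is LangChain?")
print(" -> ".join(trace))
print(response)
```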

Installing LangChain in Python

Prerequisites

- Python 3.9+

- pip

- Virtual environment (recommended)

Installation Steps

pip install langchain langchain-openai

Simple LangChain Example in Python

Basic Prompt and LLM Example

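# Requires the langchain-openai package (pip install langchain-openai)
# and an OPENAI_API_KEY environment variable.
# Older LangChain releases exposed this class as langchain.llms.OpenAI.
from langchain_openai import OpenAI

llm = OpenAI(temperature=0.7)

# invoke() sends the prompt and returns the completion as a string
response = llm.invoke("Explain LangChain in simple words")
print(response)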

Simple, clean, powerful—this is Python magic.

Chain-Based Example

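# Requires the langchain-openai package (pip install langchain-openai).
# Older releases used LLMChain and chain.run() for this step.
from langchain_core.prompts import PromptTemplate
from langchain_openai import OpenAI

# The template declares one variable, {topic}, filled in at call time
prompt = PromptTemplate(
    input_variables=["topic"],
    template="Explain {topic} in simple terms",
)

# The | operator pipes the formatted prompt into the model
chain = prompt | OpenAI(temperature=0.5)

print(chain.invoke({"topic": "LangChain"}))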

Advanced LangChain Example with Memory

Conversational Memory Example

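# ConversationChain and ConversationBufferMemory belong to LangChain's legacy API
# and are deprecated in newer releases; this example assumes a version that still ships them.
# OpenAI comes from the langchain-openai package (pip install langchain-openai).
from langchain.chains import ConversationChain
from langchain.memory import ConversationBufferMemory
from langchain_openai import OpenAI

# The buffer keeps the full transcript and feeds it back into every prompt
memory = ConversationBufferMemory()

conversation = ConversationChain(
    llm=OpenAI(temperature=0.6),
    memory=memory,
)

conversation.predict(input="Hi, my name is Pradeep")
# The second call can answer because the first exchange is stored in memory
print(conversation.predict(input="What is my name?"))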

Why Memory Matters in AI Apps

Without memory, chatbots feel robotic. With memory, they feel human.

LangChain Use Cases in Real Projects

Chatbots and Virtual Assistants

Used in customer support, HR automation, and personal assistants.

Document Question Answering

Upload PDFs, index them, and ask questions in natural language, like a search engine that answers from your own documents.
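
Indexing usually starts by splitting a document into overlapping chunks and then retrieving the chunk most relevant to a question. The following is a minimal plain-Python sketch under simplifying assumptions: chunks are measured in words, relevance is plain word overlap, and the function names are hypothetical.

```python
from typing import List


def split_into_chunks(text: str, chunk_size: int = 8, overlap: int = 2) -> List[str]:
    """Split text into word-based chunks that share `overlap` words with their neighbor."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    return [
        " ".join(words[start:start + chunk_size])
        for start in range(0, max(len(words) - overlap, 1), step)
    ]


def best_chunk(question: str, chunks: List[str]) -> str:
    """Return the chunk sharing the most words with the question."""
    question_words = set(question.lower().split())
    return max(chunks, key=lambda c: len(question_words & set(c.lower().split())))


document = (
    "LangChain connects prompts models and tools. "
    "Memory stores earlier turns of the conversation for later use."
)
chunks = split_into_chunks(document)
answer_source = best_chunk("what does memory store about the conversation", chunks)
print(answer_source)
```

In a real pipeline the selected chunk would be passed to the model as context, and word overlap would be replaced by embedding similarity.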

API Automation and Workflow Orchestration

LangChain agents can trigger APIs, run scripts, and automate tasks.

Search and Knowledge Base Systems

Integrate LangChain with vector databases like Pinecone or FAISS.
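
What a vector database does at its core is rank stored vectors by similarity to a query vector. The sketch below assumes a toy word-count "embedding" and an in-memory list in place of Pinecone or FAISS; real systems use learned embedding models and approximate nearest-neighbor indexes.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: word counts instead of a learned vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


documents = [
    "python is popular for machine learning",
    "vector databases store embeddings for search",
    "fastapi serves python web apis",
]
# The "index" is just each document paired with its vector
index = [(doc, embed(doc)) for doc in documents]


def search(query: str, top_k: int = 1) -> List[str]:
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:top_k]]


hits = search("how do vector databases search embeddings")
print(hits)
```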

LangChain vs Traditional AI Development

Development Speed Comparison

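Building prompt handling, tool calls, and memory from scratch can take weeks. With LangChain's ready-made components, a working prototype can often be built in days or even hours.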

Scalability and Maintainability

LangChain enforces structure, making apps easier to scale and maintain.

SEO and Content Automation with LangChain

AI-Powered Content Pipelines

LangChain can:

- Generate SEO articles

- Optimize keywords

- Rewrite content

- Schedule publishing

Keyword Research and Optimization

Combine LangChain with SEO APIs to automate ranking-focused content.

Best Practices for Using LangChain with Python

Prompt Engineering Tips

- Be specific

- Use examples

- Avoid vague instructions
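
These tips can be combined into a few-shot prompt: a specific instruction followed by worked examples. The helper below is a hypothetical plain-Python sketch, and the slug-writing task is only an illustrative assumption.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], query: str
) -> str:
    """Assemble an instruction, worked examples, and the new input into one prompt."""
    lines = [instruction, ""]
    for source, target in examples:
        lines += [f"Input: {source}", f"Output: {target}", ""]
    # Leave the final output blank for the model to complete
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    instruction="Rewrite each product name as a lowercase URL slug using hyphens.",
    examples=[
        ("Blue Running Shoes", "blue-running-shoes"),
        ("Kids Rain Jacket", "kids-rain-jacket"),
    ],
    query="Leather Office Chair",
)
print(prompt)
```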

Error Handling and Monitoring

Always log prompts and responses in production.
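
One simple way to do this is to wrap the model call in a logging decorator. The sketch below uses only the standard library; `with_logging` and `fake_llm` are hypothetical names, and `fake_llm` stands in for a real model call.

```python
import functools
import logging
import time
from typing import Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
logger = logging.getLogger("llm_calls")


def with_logging(llm_call: Callable[[str], str]) -> Callable[[str], str]:
    """Log every prompt, response, and latency; log and re-raise failures."""

    @functools.wraps(llm_call)
    def wrapper(prompt: str) -> str:
        started = time.perf_counter()
        try:
            response = llm_call(prompt)
        except Exception:
            logger.exception("LLM call failed | prompt=%r", prompt)
            raise
        elapsed_ms = (time.perf_counter() - started) * 1000
        logger.info("prompt=%r response=%r elapsed_ms=%.1f", prompt, response, elapsed_ms)
        return response

    return wrapper


@with_logging
def fake_llm(prompt: str) -> str:
    # Placeholder for a real model call
    return f"echo: {prompt}"


answer = fake_llm("Explain LangChain in simple words")
```

In production, prompts may contain user data, so it is worth deciding what to redact before the log line is written.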

Performance Optimization

- Cache responses

- Limit token usage

- Use async chains
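
The three tips above can be sketched with the standard library alone. This is an illustration under stated assumptions: `cached_llm` and `async_llm` are hypothetical placeholders for real model calls, and counting words is only a rough proxy for counting model tokens.

```python
import asyncio
from functools import lru_cache
from typing import List


@lru_cache(maxsize=128)
def cached_llm(prompt: str) -> str:
    """Identical prompts are answered from the cache instead of calling the model again."""
    return f"answer to: {prompt}"


def truncate_words(text: str, max_words: int) -> str:
    """Crude input limit: keep only the first max_words words."""
    return " ".join(text.split()[:max_words])


async def async_llm(prompt: str) -> str:
    await asyncio.sleep(0.01)  # simulates network latency
    return f"answer to: {prompt}"


async def run_batch(prompts: List[str]) -> List[str]:
    # gather() runs the calls concurrently and keeps the input order
    return list(await asyncio.gather(*(async_llm(p) for p in prompts)))


cached_llm("What is LangChain?")
cached_llm("What is LangChain?")  # second call is a cache hit
cache_stats = cached_llm.cache_info()
print(cache_stats.hits, cache_stats.misses)

short_text = truncate_words("one two three four five", 3)
batch = asyncio.run(run_batch(["one", "two", "three"]))
print(batch)
```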

Official Resources and References

Documentation and Tutorials

- LangChain Python documentation: https://python.langchain.com

Community Links

- GitHub Discussions

- Discord Community

- Stack Overflow

Future of LangChain and Python

Trends in LLM Frameworks

Agents, multimodal AI, and autonomous workflows are the future.

Why Python Will Continue to Lead

Python evolves with AI. As long as AI grows, Python stays on top.

Conclusion

LangChain transforms raw language models into intelligent systems, and Python is the perfect partner for this journey. With its simplicity, ecosystem, and AI-first mindset, Python makes LangChain development fast, scalable, and future-proof. If you’re serious about building AI-powered applications that rank, scale, and perform—LangChain with Python is the way forward.

FAQs

1. Is LangChain only available in Python?

No. There is also a JavaScript/TypeScript version (LangChain.js), but Python has the most mature and widely used implementation.

2. Is LangChain suitable for production systems?

Yes, many companies already use it in production.

3. Can LangChain work with FastAPI?

Absolutely. It’s a common and powerful combination.

4. Do I need deep AI knowledge to use LangChain?

Not really. Basic Python and API knowledge is enough to start.

5. Is LangChain free to use?

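Yes, the LangChain library is open-source and free to use. Calls to hosted model providers such as OpenAI are billed separately by the provider.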